Differential Photometry of Delta Scuti Variable Star “YZ Boo”

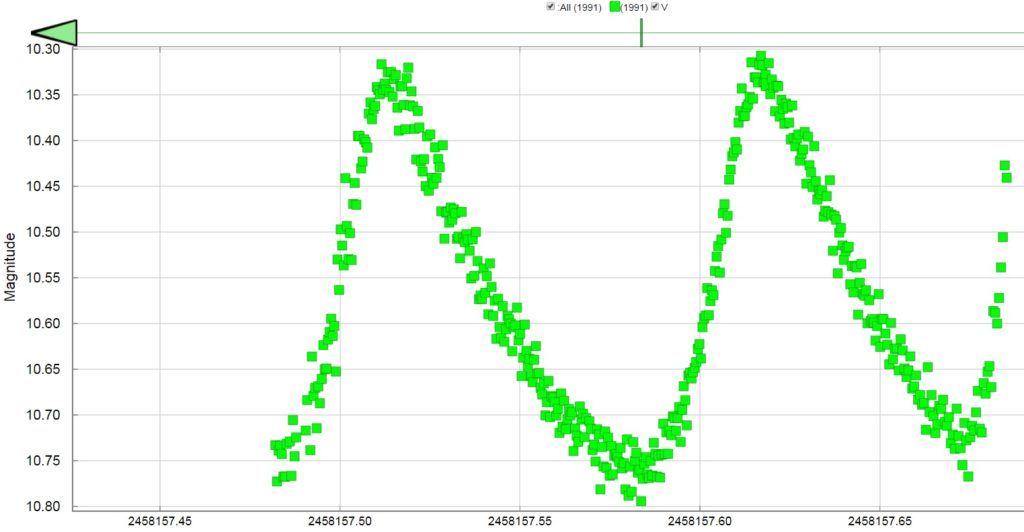

I am always interested in finding things to do with my kit when the Moon interferes with normal wideband astrophotography. Last night the Moon was full, so I turned my attention to a variable star in Bootes designated “YZ Boo”. It is a 10th-magnitude star that varies in brightness from approximately magnitude 10.3 to 10.8 with a period of about 2.5 hours. It is one of my favorites.
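Magnitudes are logarithmic, so a half-magnitude swing is bigger than it sounds. The minimal Python sketch below converts the approximate range quoted above into a flux ratio using Pogson's relation, F1/F2 = 10^(-0.4 (m1 - m2)). It also estimates how much of one cycle the 2-hour session described below can cover. The function and constant names are illustrative, and the inputs are the rounded values from the text.

```python
def flux_ratio(m_bright: float, m_faint: float) -> float:
    """Return F_bright / F_faint using Pogson's relation F1/F2 = 10**(-0.4 * (m1 - m2))."""
    return 10 ** (-0.4 * (m_bright - m_faint))


# Approximate values for YZ Boo quoted in the text.
MAG_AT_MAXIMUM = 10.3
MAG_AT_MINIMUM = 10.8
PERIOD_HOURS = 2.5
SESSION_HOURS = 2.0

ratio = flux_ratio(MAG_AT_MAXIMUM, MAG_AT_MINIMUM)
cycles = SESSION_HOURS / PERIOD_HOURS

print(f"At maximum the star is about {ratio:.2f} times as bright as at minimum")
print(f"A {SESSION_HOURS:g}-hour session covers about {cycles:.1f} of a cycle")
```

In other words, the star gains roughly 58% in brightness from minimum to maximum, and a 2-hour run captures most, but not all, of one pulsation cycle.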

Conditions deteriorated rapidly, not only because of the bright Moon but also because a storm front was moving swiftly toward me. When I began imaging there was a feeling of dampness in the air, but nothing severe. By the end of the 2-hour session my equipment was wet with dew. Thankfully, my DIY dew heater bands, one around the objective lens and one around the nose of the CCD camera, prevented disaster.

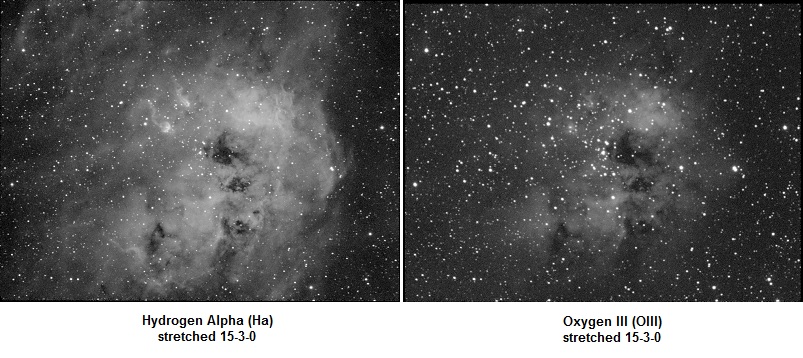

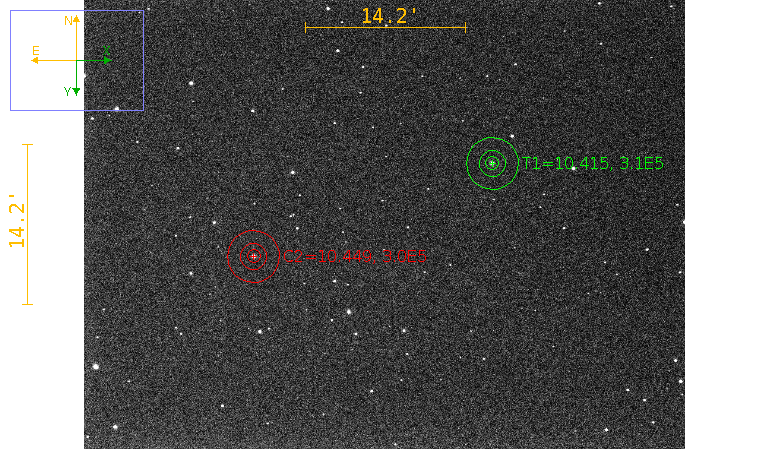

You can see how a blanket of damp air descended on me from the first frame:

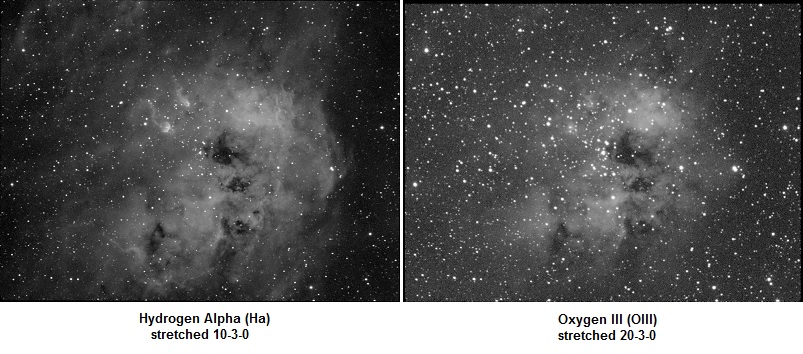

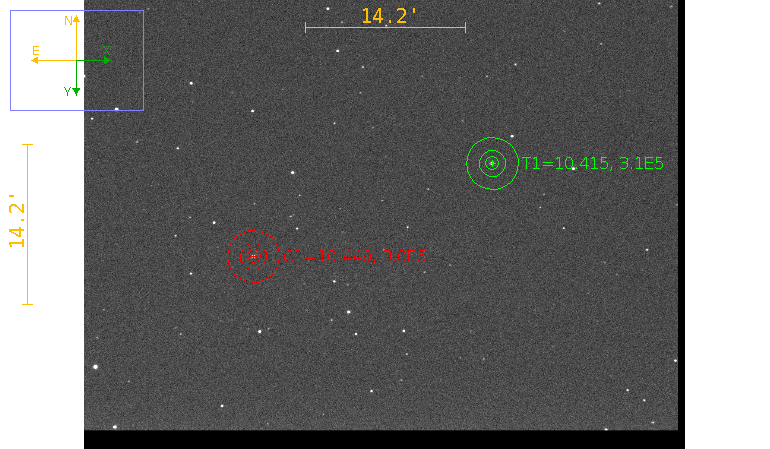

to the last frame:

I had to cut the session short after 2 hours when thick clouds arrived.

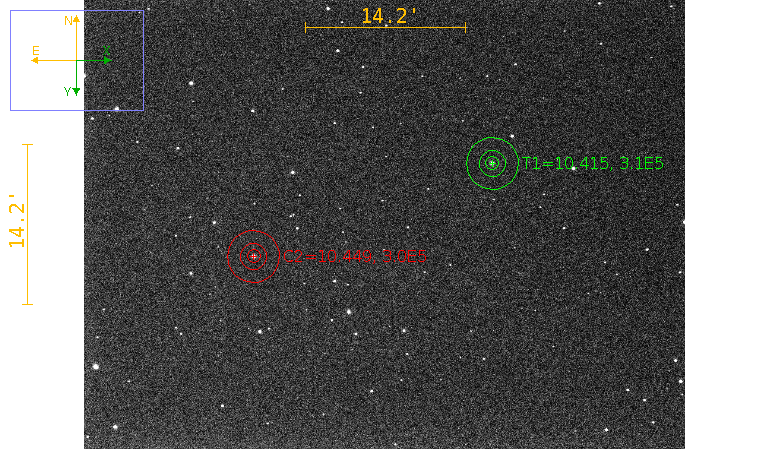

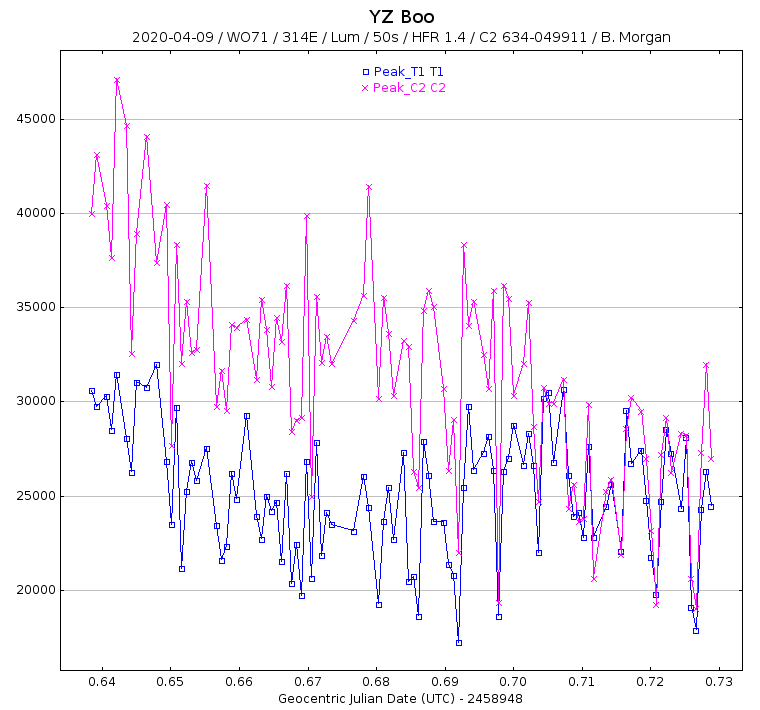

I chose a 50 s exposure, which put the star's peak pixel near the middle of the camera's 16-bit range: around 30,000 ADU out of a possible 65,535. Thankfully, the field included a few stars of constant brightness, one of which I used as the comparison star.
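A quick sanity check on that exposure choice can be sketched in Python. It assumes the peak pixel scales roughly in proportion to the star's flux (true only if focus and seeing stay about the same) and it ignores the sky background under the star. Even in the worst case, where the 30,000 ADU peak was measured at minimum light, the 0.5-magnitude rise to maximum would still keep the peak below 65,535 ADU. The helper names are hypothetical.

```python
FULL_SCALE_ADU = 2**16 - 1  # 65,535: the largest value a 16-bit camera can record


def headroom_factor(peak_adu: float, full_scale: int = FULL_SCALE_ADU) -> float:
    """How many times brighter the peak pixel could get before hitting full scale."""
    return full_scale / peak_adu


def flux_ratio_for_amplitude(delta_mag: float) -> float:
    """Flux ratio corresponding to a brightness change of delta_mag magnitudes."""
    return 10 ** (0.4 * delta_mag)


peak = 30_000        # approximate peak quoted in the text, in ADU
amplitude_mag = 0.5  # 10.3 to 10.8, as quoted for YZ Boo

headroom = headroom_factor(peak)
needed = flux_ratio_for_amplitude(amplitude_mag)
print(f"headroom x{headroom:.2f}, variability x{needed:.2f}, "
      f"stays below full scale: {headroom > needed}")
```

Many sensors also become non-linear well before the digital maximum, which is one more reason to aim for the middle of the range rather than the top.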

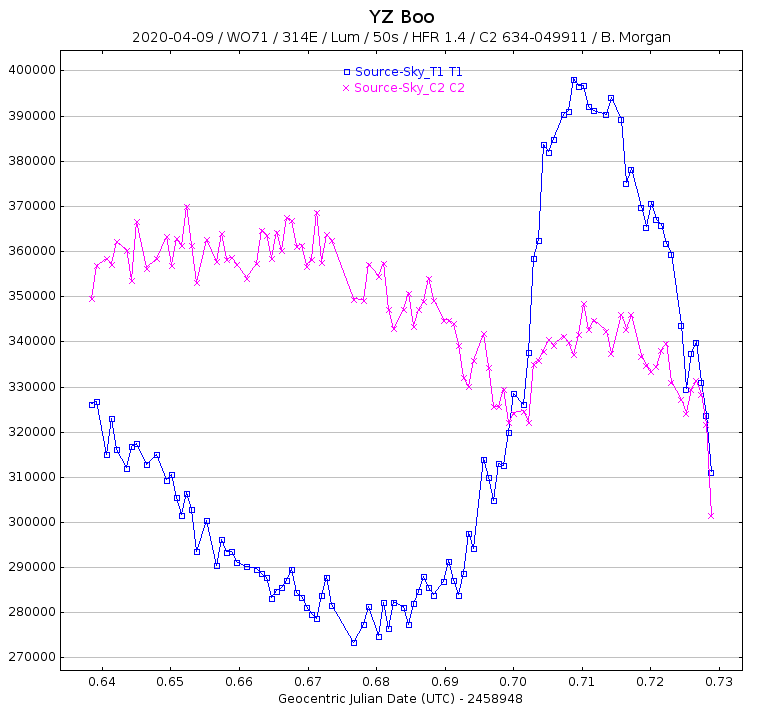

Here is a plot of the peak pixel value over time. Focus on the pink curve, which is the constant-brightness comparison star. Its peak declines steadily as the damp air blocks more and more starlight:

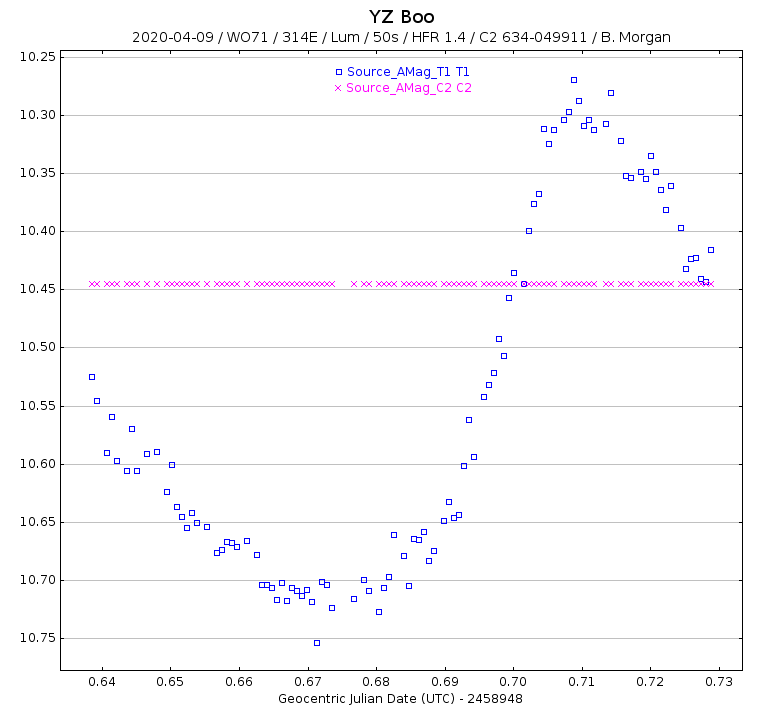
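Because the comparison star's true brightness is constant, any trend in its readings measures the changing transparency of the sky. The Python sketch below fits a least-squares straight line to a comparison-star series and reports the overall fractional drop. The numbers are invented for illustration and are not the measurements plotted above; `linear_fit` and `fractional_drop` are hypothetical helper names.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def fractional_drop(series):
    """Fraction of the first value lost by the last value (0.2 means 20% dimmer)."""
    return 1 - series[-1] / series[0]


# HYPOTHETICAL comparison-star peaks, made up for illustration only.
minutes = [0, 30, 60, 90, 120]
comp_peak_adu = [30_000, 28_500, 27_000, 25_500, 24_000]

slope, intercept = linear_fit(minutes, comp_peak_adu)
print(f"trend {slope:.0f} ADU per minute, "
      f"overall drop {fractional_drop(comp_peak_adu):.0%}")
```

The variable star sits under the same patch of sky, so it suffers roughly the same fractional loss; that shared loss is what the differential step removes.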

The next plot requires some explanation. If you look back at the first and last frames, you can see that I placed an “aperture” on the variable star (T1) and on the comparison star (C2). The central bull's-eye surrounds the star so that its light can be summed over that area. Around it are two more rings: the inner annulus and the outer annulus. The ring between the inner and outer annulus samples the sky background, while the gap between the aperture and the inner annulus is simply a buffer that keeps the star's own light out of the sky sample.

This plot shows each star's aperture total minus the sky background measured in the annulus (the sky level per pixel multiplied by the number of pixels in the aperture). Notice how we are getting closer to the final light curve:

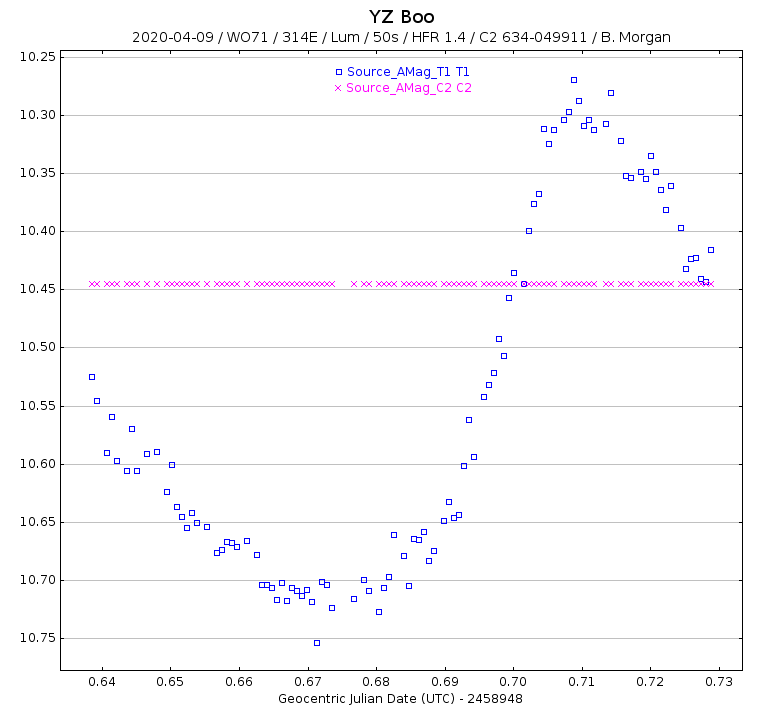
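The aperture-and-annulus arithmetic fits in a few lines of Python. This is a simplified illustration, not AstroImageJ's actual algorithm: it counts whole pixels by the distance of their centers from the star (real packages may weight partial pixels at the edge), and it estimates the sky with a median, an assumed choice because it resists stray stars in the annulus. The function name and radii are hypothetical.

```python
import math
from statistics import median


def aperture_photometry(image, x0, y0, r_ap, r_in, r_out):
    """Return sky-subtracted counts for a star centered at (x0, y0).

    image is a list of rows, indexed image[y][x], holding pixel values in ADU.
    Pixels within r_ap of the center form the aperture, pixels between r_in and
    r_out form the sky annulus, and pixels in between are an ignored buffer.
    """
    aperture_sum = 0.0
    aperture_pixels = 0
    sky_values = []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            r = math.hypot(x - x0, y - y0)
            if r <= r_ap:
                aperture_sum += value
                aperture_pixels += 1
            elif r_in < r <= r_out:
                sky_values.append(value)
    sky_per_pixel = median(sky_values)
    # Remove the sky that also fell inside the aperture.
    return aperture_sum - sky_per_pixel * aperture_pixels


# Synthetic 21 x 21 frame: flat sky of 100 ADU plus 1,000 ADU of "starlight" at the center.
frame = [[100.0] * 21 for _ in range(21)]
frame[10][10] += 1_000.0
print(aperture_photometry(frame, x0=10, y0=10, r_ap=3, r_in=6, r_out=9))  # prints 1000.0
```

Raising the sky level in the synthetic frame leaves the result unchanged, which is exactly why the annulus is there.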

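There is one step left: combine the blue plot (the variable star) with the pink plot (the comparison star). Expressed in magnitudes this is a simple difference between the two stars, which is the same as taking the ratio of their fluxes. Because the damp air dims both stars by very nearly the same fraction, that dimming cancels out, and what remains is the final light curve.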
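In code, the differential step is a subtraction of instrumental magnitudes, equivalent to m_T - m_C = -2.5 log10(F_T / F_C). The minimal Python sketch below uses hypothetical net counts to show why the damp air drops out: dimming both stars by the same factor leaves the differential magnitude unchanged. The function names are illustrative, and this is the general technique rather than AstroImageJ's own code.

```python
import math


def instrumental_mag(net_counts: float) -> float:
    """Instrumental magnitude from sky-subtracted counts (no zero point applied)."""
    return -2.5 * math.log10(net_counts)


def differential_mag(target_counts: float, comp_counts: float) -> float:
    """m_T - m_C, equivalent to -2.5 * log10(target_counts / comp_counts)."""
    return instrumental_mag(target_counts) - instrumental_mag(comp_counts)


def light_curve(target_series, comp_series):
    """Differential magnitude for each frame, pairing target and comparison counts."""
    return [differential_mag(t, c) for t, c in zip(target_series, comp_series)]


# HYPOTHETICAL net counts: in frame 2 both stars are dimmed by 20% (dew/clouds);
# in frame 3 the variable itself is 20% brighter than in frame 1.
target = [50_000.0, 40_000.0, 60_000.0]
comp = [40_000.0, 32_000.0, 40_000.0]

for i, dm in enumerate(light_curve(target, comp), start=1):
    print(f"frame {i}: {dm:+.3f} mag")
```

Frames 1 and 2 come out identical even though the raw counts fell by 20%, while frame 3 is more negative because a smaller magnitude means a brighter star.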

For comparison, here is the light curve of YZ Boo contributed by members of the American Association of Variable Star Observers (AAVSO). These observers have some serious equipment, including CCD cameras with a full-well depth of 100,000 electrons:

I used a free package called AstroImageJ for calibration, alignment and photometry. It is easy to use once you have run through it a couple of times. AAVSO's Variable Star Index (VSX) is also available online to members and non-members alike; with it you can search a vast database for variable stars that meet your criteria. You don't necessarily need costly photometric filters to have fun: I used a standard luminance filter, although I do have Astrodon Photometric “V” and “B” filters on another filter wheel.
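Calibration happens before any of the measurements above, and its core arithmetic is usually simple: subtract a master dark taken at the same exposure and temperature, then divide by a master flat normalized to an average of 1 so that vignetting and dust shadows are evened out. The Python sketch below assumes the master frames already exist (with the flat's own bias or dark already removed) and uses hypothetical names; it illustrates the general technique, not AstroImageJ's implementation.

```python
from statistics import fmean


def calibrate(light, master_dark, master_flat):
    """Return (light - dark) / (flat / mean(flat)) pixel by pixel.

    All frames are lists of rows with identical dimensions, in ADU.
    """
    flat_mean = fmean(v for row in master_flat for v in row)
    return [
        [(lv - dv) / (fv / flat_mean) for lv, dv, fv in zip(l_row, d_row, f_row)]
        for l_row, d_row, f_row in zip(light, master_dark, master_flat)
    ]


# HYPOTHETICAL 2 x 2 frames: a uniform 1,000 ADU signal seen through optics whose
# left column responds at 80% and right column at 120% of the average, plus 10 ADU of dark.
light = [[810.0, 1_210.0], [810.0, 1_210.0]]
dark = [[10.0, 10.0], [10.0, 10.0]]
flat = [[0.8, 1.2], [0.8, 1.2]]

print(calibrate(light, dark, flat))
```

After calibration every pixel reads about 1,000 ADU again, so a star's measured brightness no longer depends on where it falls in the field.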